Summary

Social things interacting in trustworthy spaces represent a model for an Internet of Things that is scalable to trillions of devices and still works. This post describes that concept and proposes picos as a platform for building social things.

Humans and other gregarious animals naturally and dynamically form groups. These groups have frequent changes in membership and establish trust requirements based on history and task. Similarly, the Internet of Things (IoT) will be built from devices that must be able to discover other interesting devices and services, form relationships with them, and build trust over time based on those interactions. One way to think about this problem is to envision things as social and imagine how sociality can help solve some of the hard problems of the IoT.

Previously I've written about a Facebook of Things and a Facebook for My Stuff that describe the idea of social products. This post expands that idea to take it beyond the commercial.

As I mentioned above, humans and other social animals have created a wide variety of social constructs that allow us to not only function, but thrive in environments where we encounter and interact with other independent agents, even when those agents are potentially harmful or even malicious. We form groups and, largely, we do it without some central planner putting it all together. Individuals in these groups learn to trust each other, or not, on the basis of social constructions that have evolved over time. Things do fail and security breaks down from time to time, but those are exceptions, not the rule. We're remarkably successful at protecting ourselves from harm and dealing with anomalous behavior from other group members or the environment, while getting things done.

There is no greater example of this than a city. Cities are social systems that grow and evolve. They are remarkably resilient. I've referenced Geoffrey West's remarkable TED talk on the surprising math of cities and corporations before. As West says, "you can drop an atom bomb on a city and it will survive."

The most remarkable thing about city planning is perhaps the fact that cities don't really need planning. Cities happen. They are not only dynamic, but spontaneous. The greatness of a city is that it isn't planned. Similarly, the greatness of IoT will be in spontaneous interactions that no one could have foreseen.

My contention is that we want device collections on the Internet of Things to be more like cities, where things are heterarchical and spontaneous, than corporations, where things are hierarchical and planned. Where we've built static networks of devices with a priori determined relationships in the past, we have to create systems that support dynamic group forming based on available resources and goals. Devices on the Internet of Things will often be part of temporary, even transient, groups. For example, a meeting room will need to be constantly aware of its occupants and their devices so it can properly interact with them. I'm calling these groups of social things "trustworthy spaces."

My Electric Car

As a small example, suppose I buy an electric car. The car needs to negotiate charging times with the air conditioner, home entertainment system, and so on. The charging time might change every day. There are several hard problems in that scenario, but the one I want to focus on is group forming. Several things need to happen:

- The car must know that it belongs to me. Or, more generally, it has to know its place in the world.

- The car must be able to discover that there's a group of things that also belong to me and care about power management.

- Other things that belong to me must be able to dynamically evaluate the trustworthiness of the car.

- Members of the group (including the car) must be able to adjust their interactions with each other on the basis of their individual calculations of trustworthiness.

- The car may encounter other devices that misrepresent themselves and their intentions (whether due to fault or outright maliciousness).

- Occasionally, unexpected, even unforeseen events will happen (e.g. a power outage). The car will have to adapt.
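The discovery step in the list above can be sketched in a few lines. This is only an illustration under assumptions: the `Advertisement` record, the `find_group` function, and the idea that devices announce an owner and a set of interests are all hypothetical, not part of any existing platform. Note that the owner field here is merely claimed, which is exactly why the trust evaluation in the later bullets is still needed.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Advertisement:
    """Hypothetical announcement a device makes about itself (unverified claims)."""
    device: str
    owner: str
    interests: FrozenSet[str]


def find_group(ads: List[Advertisement], owner: str, interest: str) -> List[str]:
    """Return devices that claim the given owner and care about the given interest."""
    return [ad.device for ad in ads
            if ad.owner == owner and interest in ad.interests]


# Example: the car looks for things that belong to "me" and care about power.
ads = [
    Advertisement("car", "me", frozenset({"power"})),
    Advertisement("air-conditioner", "me", frozenset({"power", "climate"})),
    Advertisement("tv", "me", frozenset({"media"})),
    Advertisement("friends-car", "friend", frozenset({"power"})),
]
power_group = find_group(ads, owner="me", interest="power")
```

Filtering on advertised attributes only finds candidates. Whether any candidate is actually admitted would depend on each member's own judgment of its trustworthiness.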

We could extend this situation to a group of devices that don't all belong to the same owner too. For example, I'm at my friend's house and want to charge the car.

The requirements outlined above imply several important principles:

- Devices in the system interact as independent agents. They have a unique identity and are capable of maintaining state and running programs.

- Devices have a verifiable provenance that includes significant events from their life-cycle, their relationships with other devices, and a history of their interactions (i.e. a transaction record).

- Devices are able to independently calculate and use the reputation of other actors in the system.

- Devices rely on protecting themselves from other devices rather than a system preventing bad things from happening.
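One way to picture these principles together is a small data structure. The sketch below is an assumption-laden illustration, not a description of how picos or any real device works: the `Device` class, its field names, and the use of a SHA-256 hash chain to make the provenance log tamper-evident are all choices made for this example. A hash chain only detects after-the-fact edits; real verifiable provenance would also need signatures from the parties involved.

```python
import hashlib
import json
import uuid
from dataclasses import dataclass, field
from typing import Dict, List


def _digest(kind: str, detail: str, prev: str) -> str:
    """Hash one provenance entry together with the hash of the entry before it."""
    body = {"kind": kind, "detail": detail, "prev": prev}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class Device:
    """Hypothetical independent agent: identity, state, provenance, local reputations."""
    name: str
    identity: str = field(default_factory=lambda: uuid.uuid4().hex)
    state: Dict[str, str] = field(default_factory=dict)
    provenance: List[Dict[str, str]] = field(default_factory=list)
    # This device's own view of other devices, keyed by their identity.
    reputations: Dict[str, float] = field(default_factory=dict)

    def record(self, kind: str, detail: str) -> None:
        """Append a life-cycle or interaction event to the hash chain."""
        prev = self.provenance[-1]["hash"] if self.provenance else ""
        self.provenance.append(
            {"kind": kind, "detail": detail, "prev": prev,
             "hash": _digest(kind, detail, prev)})

    def provenance_is_intact(self) -> bool:
        """Recompute the chain and report whether any entry was altered."""
        prev = ""
        for entry in self.provenance:
            expected = _digest(entry["kind"], entry["detail"], entry["prev"])
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True

    def will_interact_with(self, other: "Device", threshold: float = 0.5) -> bool:
        """Self-protection: decide locally; unknown devices default to 0.0."""
        score = self.reputations.get(other.identity, 0.0)
        return other.provenance_is_intact() and score >= threshold
```

The point of the design is that nothing outside the device makes the decision: `will_interact_with` consults only what this device knows and has checked for itself.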

I'm also struck that other factors, like allegiance, might be important, but I'm not sure how at the moment. Provenance and reputation might be general enough to take those things into account.

Trustworthy Spaces

A trustworthy space is an abstract extent within which a group of agents interact, not a physical room or even geographic area. It is trustworthy only to the extent an individual agent deems it so.

In a system of independent agents, trustworthiness is an emergent property of the relationships among a group of devices. Let's unpack that.

When I say "trustworthiness," that doesn't imply a relationship is trustworthy. The trustworthiness might be zero, meaning it's not trusted. When I say "emergent," I mean that this is a property that is derived from other attributes of the relationship.

Trustworthy spaces don't prevent bad things from happening, any more than we can keep every bad thing from happening in social interactions. I think it's important to distinguish safety from security. We are able to evaluate security in relatively static, controlled situations. But usually, when discussing interactions between independent agents, we focus on safety.

There are several properties of trustworthy spaces that are important to their correct functioning:

Decentralized

By definition, a trustworthy space is decentralized because the agents are independent. They may be owned, built, and operated by different entities and their interactions cross those boundaries.

Event-Driven

A trustworthy space is populated by independent agents. Their interactions with one another will be primarily event-driven. Event-based systems are more loosely coupled than other interaction methodologies. Events create a networked pattern of interaction with decentralized decision making. Because new players can enter the event system without others having to give permission, be reconfigured, or be reprogrammed, event-based systems grow organically.
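A minimal sketch of that loose coupling follows. The `EventBus` class and the event name `power:price_changed` are hypothetical, and a single in-process object is only a stand-in for whatever decentralized transport would carry events between real devices. What it shows is that a new participant joins by subscribing, with no change to the code that raises events or to the participants already listening.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, object]], None]


class EventBus:
    """Hypothetical in-process stand-in for an event channel between devices."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """A new player joins simply by registering interest in an event type."""
        self._handlers[event_type].append(handler)

    def raise_event(self, event_type: str, attrs: Dict[str, object]) -> int:
        """Deliver the event to every current subscriber; return how many got it."""
        handlers = list(self._handlers.get(event_type, []))
        for handler in handlers:
            handler(attrs)
        return len(handlers)
```

The sender never names its receivers, so each receiver decides for itself what, if anything, to do with an event.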

Robust

Trustworthy spaces are robust. That is, they don't break under stress. Rather than trying to prevent failure, systems of independent agents have to accept failure and be resilient.

In designed systems we rely on techniques such as transactions to ensure that the system remains in a consistent state. Decentralized systems rely on retries, compensating actions, or just plain giving up when something doesn't work as it should. We have some experience with this in distributed systems that are eventually consistent, but that's just a start at what the IoT needs.
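The retry, compensate, or give up pattern can be sketched as a small helper. The function name `attempt_with_retries` and its parameters are assumptions made for illustration. It also treats every exception as retryable, which a real system would likely refine.

```python
import time
from typing import Callable, Optional


def attempt_with_retries(action: Callable[[], None],
                         compensate: Optional[Callable[[], None]] = None,
                         retries: int = 3,
                         delay_seconds: float = 0.0) -> bool:
    """Try an action a few times; if it never succeeds, compensate and give up."""
    for attempt in range(retries):
        try:
            action()
            return True
        except Exception:
            # Wait before the next try, but not after the final failure.
            if attempt < retries - 1:
                time.sleep(delay_seconds)
    if compensate is not None:
        # Undo whatever partial work was done before the failure,
        # e.g. release a charging slot that was reserved.
        compensate()
    return False
```

Unlike a transaction, nothing here guarantees a consistent state. The caller gets a boolean and has to carry on sensibly either way.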

Inconsistencies will happen. Self-healing is the process of recognizing inconsistent states and taking action to remediate the problem. Internal monitoring by the system of anything that might be wrong and then taking corrective action has to be automatic.

AntiFragile

More than robustness, antifragility is the property systems exhibit when they don't just cope with anomalies, but instead thrive in their presence. Organic systems exhibit antifragility; they get better when faced with random events.

IoT devices will operate in environments that are replete with anomalies. Most anomalies are not bad or even errors. They're simply unexpected. Antifragility takes robustness to the next level by not merely tolerating anomalous activity, but using it to adapt and improve.

I don't believe we know a lot about building systems that exhibit antifragility, but I believe that we'll need to develop these techniques for a world with trillions of connected things.

Trust Building

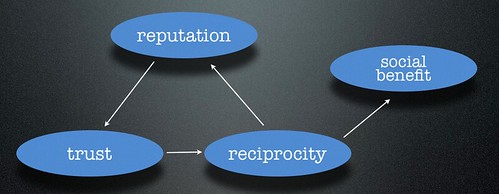

Trust building will be an important factor in trustworthy spaces. Each agent must learn what other agents to trust and to what level. These calculations will be constantly adjusted. Trust, reputation, and reciprocity (interaction) are linked in some very interesting ways. Consider the following diagram from a paper by Mui et al entitled A Computational Model of Trust and Reputation:

We define reputation as the perception about an entity's intentions and norms that it creates through past actions. Trust is a subjective expectation an entity has about another's future behavior based on the history of their encounters. Reciprocity is a mutual exchange of deeds (such as favor or revenge). Social benefit or harm derives from this mutual exchange.

If you want to build a system where entities can trust one another, it must support the creation of reputations since reputation is the foundation of trust. Reputation is based on several factors:

- Provenance—the history of the agent, including a "chain of custody" that says where it's been and what it's done in the past, along with attributes of the agent, verified and unverified.

- Reciprocity—the history of the agent's interaction with other agents. A given agent knows about its interactions and the outcomes. To the extent they are visible, interactions between other agents can also be used.

Reputation is not static and it might not be a single value. Moreover, reputation is not a global value, but a local one. Every agent continually calculates and evaluates the reputation of every other agent. Transparency is necessary for the creation of reputation.
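To make the idea of local, continually updated reputation concrete, here is a minimal sketch. It is an assumption for illustration, not the model from the paper cited above: the `ReputationLedger` class is hypothetical, it uses only an agent's own interaction outcomes (reciprocity) and ignores provenance, and it scores a peer with Laplace's rule of succession, (successes + 1) / (interactions + 2), so an unknown peer starts at 0.5.

```python
from collections import defaultdict
from typing import DefaultDict, List


class ReputationLedger:
    """Hypothetical per-agent record of how interactions with each peer turned out."""

    def __init__(self) -> None:
        self._outcomes: DefaultDict[str, List[bool]] = defaultdict(list)

    def observe(self, peer_id: str, cooperated: bool) -> None:
        """Record the outcome of one interaction with a peer."""
        self._outcomes[peer_id].append(cooperated)

    def reputation(self, peer_id: str) -> float:
        """Estimate the chance the peer cooperates next time (rule of succession)."""
        outcomes = self._outcomes.get(peer_id, [])
        return (sum(outcomes) + 1) / (len(outcomes) + 2)
```

Because each agent keeps its own ledger, two agents with different histories will hold different reputations for the same peer, which is what makes the value local rather than global.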

A Platform for Exploring Social Things

A few weeks ago I wrote about persistent compute objects, or picos. In the introduction to that piece, I wrote:

Persistent Compute Objects, or picos, are tools for modeling the Internet of Things. A pico represents an entity—something that has a unique identity and a long-lived existence. Picos can represent people, places, things, organizations, and even ideas.

The motivation for picos is to design infrastructure to support the Internet of Things that is decentralized, heterarchical, and interoperable. These three characteristics are essential to a workable solution and are sadly lacking in our current implementations.

Without these three characteristics, it's impossible to build an Internet of Things that respects people's privacy and independence.

Picos are a perfect platform for exploring social products. They come with all the necessary infrastructure built in. Their programmability makes them flexible enough and powerful enough to demonstrate how social products can interact through reputation to create trustworthy spaces.

Benefits of Social Things

Social things interacting with each other in trustworthy spaces offer significant advantages over static networks of devices:

- Less configuration and set up time since things discover each other and set up mutual interactions on their own.

- More freedom for people to buy devices from different manufacturers and have them work together.

- Better overall protection from anomalies, perhaps even systems of devices that thrive in their presence.

Social things are a way of building a true Internet of Things instead of a CompuServe of Things.

My thoughts on this topic were influenced by a CyDentity workshop I attended last week put on by the Department of Homeland Security at Rutgers University. In particular, some of the terminology, such as "provenance" and "trustworthy spaces," were things I heard there that gelled with some of my thinking on reputation and social things.