Summary

Persistent compute objects, or picos, are powerful, general-purpose, online computers that can be used to model people, places, organizations, and concepts. This blog post describes the fundamental features of picos.

Update [20131009]: I've updated the CloudOS section to be more complete and better describe the relationship between picos and CloudOS.

In earlier posts, I've talked about something I call a "persistent compute object" or pico. For example, in Pot Holes and Picos I described how picos could be used to model real-world physical objects as well as concepts, and in Sharing in SquareTag: Borrowing my Truck I described subscription patterns for picos. But I've never really described the fundamentals of picos.

The term "personal cloud" has been used recently to describe everything from network hard drives to cloud-based storage like Dropbox to much broader concepts. Consequently, I find the term "personal cloud" to be ambiguous.

Persistent compute objects, on the other hand, have very specific characteristics and are usable for much more than just a person's online storage. A pico has the following features:

- Identity—they represent a specific entity

- Storage—they persistently encapsulate both structured and unstructured data

- Open event network—they respond to events

- Processing—they run applications autonomously

- Event Channels—they have connections to other picos

- APIs—they provide inbound APIs and access other online services

A pico is a small, general-purpose, online computer. In practice they are usually virtualized for scalability's sake, but that's not a fundamental feature. Another way to think about picos is as objects (in the object-oriented programming sense) that have persistent storage and are always online.

In our implementation, picos run inside a container (similar in concept to a Java Web container) called the Kinetic Rules Engine, or KRE. KRE provides the underlying environment for picos to exist, execute, and communicate.

Picos supported by KRE are all programmed with rules, but rule-based programming is not fundamental. Rules are, however, the most convenient way to handle events. The rule language supported by picos in KRE is the Kinetic Rule Language, or KRL.

Picos are persistent in the sense that they exist from when they are created until they are explicitly deleted. Restarting the KRE container, for example, doesn't change the state of the picos for which it is responsible. They come back online just as they were when the container was stopped.

Identity

Each pico has a unique identity that represents a person, device, thing, organization, concept, or other identifiable entity. This identity allows the pico to function as an independent agent and create relationships with other picos. The basic identifier is called a Kinetic Entity Number or KEN. KENs are chosen so as to be globally unique. This unique identity is the foundation upon which much of the pico's functionality is based. The pico's identity allows it to function as a peer in a network of picos and is the basis for its addressability. Because identity is foundational, containers are inherently multi-tenanted.
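To make the multi-tenancy point concrete, here is a minimal Python sketch of a container that holds many picos and addresses each one only through its identifier. The `PicoContainer` and `Pico` names are hypothetical, and using `uuid.uuid4()` to mint KENs is an assumption; the text only says KENs are chosen to be globally unique.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Pico:
    # Hypothetical stand-in for a pico: an identity plus private state.
    ken: str
    name: str
    state: dict = field(default_factory=dict)


class PicoContainer:
    """A toy multi-tenant container: many picos, each addressed by its KEN."""

    def __init__(self):
        self._picos = {}

    def create_pico(self, name):
        # Assumption: a random UUID stands in for a globally unique KEN.
        ken = uuid.uuid4().hex
        self._picos[ken] = Pico(ken=ken, name=name)
        return ken

    def lookup(self, ken):
        # Identity is the basis for addressability: every lookup goes through the KEN.
        return self._picos[ken]

    def delete_pico(self, ken):
        # A pico exists until it is explicitly deleted.
        del self._picos[ken]


container = PicoContainer()
owner = container.create_pico("owner")
truck = container.create_pico("truck")
print(owner != truck)                # True: two distinct identities
print(container.lookup(truck).name)  # truck
```

Because the container never assumes a single owner, any number of people's picos can share it; what separates them is identity, not separate installations.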

Persistent Storage

Picos encapsulate data. The word "encapsulate" is important because it implies more than merely storing data. Encapsulation implies that the data is stored persistently and that processes running in the pico have access to the data. The only way for the data to be exposed outside the pico is for a function to explicitly expose the value.

The current implementation of picos persists data inside entity variables on a per-ruleset basis. That is, each ruleset sees its own set of independent entity variables and values. This poses no limitation to data sharing inside the pico, however.

Entity variables free pico programmers from having to worry about databases for common storage needs.
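The following Python sketch shows what per-ruleset entity variables and their persistence across a restart amount to. It is a hypothetical illustration, not KRE's storage layer: the `EntityVariableStore` class, the ruleset IDs, and the use of a JSON file as the backing store are all assumptions.

```python
import json
import os
import tempfile


class EntityVariableStore:
    """Per-ruleset entity variables for one pico, persisted to a JSON file.

    Hypothetical sketch: each ruleset ID gets its own namespace, so two
    rulesets can use the same variable name without colliding.
    """

    def __init__(self, path):
        self.path = path
        self._data = {}
        if os.path.exists(path):
            # Reload earlier state, as a pico does when its container restarts.
            with open(path) as f:
                self._data = json.load(f)

    def get(self, rid, name, default=None):
        return self._data.get(rid, {}).get(name, default)

    def set(self, rid, name, value):
        self._data.setdefault(rid, {})[name] = value
        self._save()

    def _save(self):
        # Write through on every change so nothing is lost on restart.
        with open(self.path, "w") as f:
            json.dump(self._data, f)


path = os.path.join(tempfile.mkdtemp(), "pico-state.json")
store = EntityVariableStore(path)
store.set("counter_app", "count", 3)
store.set("inventory_app", "count", 10)  # same name, different ruleset
restarted = EntityVariableStore(path)    # simulate a container restart
print(restarted.get("counter_app", "count"))    # 3
print(restarted.get("inventory_app", "count"))  # 10
```

The ruleset program never opens a database or writes a schema; it reads and writes named values, and the store handles persistence.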

Open Event Network

Events that are sent to a pico are processed on an open event network. The default behavior is for every rule in the pico to see every event and determine, based on its event expression, whether to select or not. Selected rules are run by the container.

This process is made efficient by a "salience graph" that determines which rules are salient for a given event and only returns those that might select. The event expression for each of those rules is then evaluated to determine if the rule does select.
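A simplified Python sketch of the two-step selection described above: a lookup keyed by event domain and type finds the salient rules, and only those rules have their event expressions evaluated. The flat index here is a stand-in, not KRE's actual salience graph, and modeling an event expression as a predicate over event attributes is an assumption. The rule names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


def always(attrs):
    return True


@dataclass
class Rule:
    name: str
    domain: str
    event_type: str
    # Simplified event expression: a predicate over the event's attributes.
    condition: Callable[[dict], bool] = always


class SalienceIndex:
    """Maps (domain, type) to the rules that might select on that event."""

    def __init__(self, rules):
        self._index = defaultdict(list)
        for rule in rules:
            self._index[(rule.domain, rule.event_type)].append(rule)

    def salient_rules(self, domain, event_type):
        return self._index.get((domain, event_type), [])

    def select(self, domain, event_type, attrs):
        # Only salient rules have their event expressions evaluated.
        return [r for r in self.salient_rules(domain, event_type) if r.condition(attrs)]


rules = [
    Rule("record_report", "pothole", "reported"),
    Rule("alert_crew", "pothole", "reported",
         condition=lambda attrs: attrs.get("severity", 0) >= 3),
    Rule("mark_fixed", "pothole", "fixed"),
]
index = SalienceIndex(rules)
selected = index.select("pothole", "reported", {"severity": 1})
print([r.name for r in selected])  # ['record_report']
```

Note that `mark_fixed` is never evaluated for a `pothole:reported` event; skipping irrelevant rules is where the efficiency comes from.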

Open event networks provide for loose coupling between event senders and the pico. In particular, open event networks provide the following powerful aspects:

- Receiver-Driven Flow Control—Once an event generator sends an event notification, its role in determining what happens to that event is over. Downstream event processors may ignore the event, may handle it as appropriate for their domain, or may propagate it to other processors. A single event can induce multiple downstream activities as it and its effects propagate through the event-processing network. Unlike demand-driven (i.e. request-response) interactions, event notifications do not include specific processing instructions. This reversal of semantic responsibility is critical to loose coupling.

- Near Real-Time Propagation—Event processing systems work in real-time in contrast with batch-oriented, fixed-schedule architectures. Events propagate through the network of picos soon after they happen and can further affect how those picos will interpret and react to future events from the same or different event generators. Open event networks thus allow a more natural way to design and create real-time information systems. As more and more information online gains a real-time component, this kind of processing becomes more and more important.

Processing

Picos are only interesting insofar as they do something. Applications, implemented as collections of rulesets, respond to events and access entity variables. These applications often operate without the owner of the pico being present. That is, they operate autonomously.

Processing sets picos apart from many of the other so-called personal cloud technologies. Picos are more than just an online storage container or secure data sharing system. Picos run programs in response to their inputs. The results are based on those inputs and the current state as embodied in the entity variables and the context discernible from the APIs. The response might be JSON, other events, or both. They can produce side effects by calling other Web APIs.
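As a rough illustration of "inputs plus current state yield JSON, events, or both", here is a hypothetical rule written as a Python function. The `RuleResult` type, the directive shape (`name`/`options`), and the escalation threshold are assumptions made for the sketch, not KRL semantics.

```python
import json
from dataclasses import dataclass, field


@dataclass
class RuleResult:
    directives: list = field(default_factory=list)  # returned to the sender as JSON
    raised: list = field(default_factory=list)       # new (domain, type, attrs) events


def on_pothole_reported(attrs, entity_vars):
    """Hypothetical rule: update state, answer with JSON, and maybe raise an event."""
    count = entity_vars.get("report_count", 0) + 1
    entity_vars["report_count"] = count
    entity_vars["last_street"] = attrs.get("street")
    result = RuleResult()
    result.directives.append({"name": "ack", "options": {"reports": count}})
    if count >= 3:
        # The response depends on accumulated state, not just this one input.
        result.raised.append(("pothole", "escalate", {"reports": count}))
    return result


state = {}  # stands in for the rule's entity variables
for _ in range(3):
    outcome = on_pothole_reported({"street": "Main St"}, state)
print(json.dumps(outcome.directives))  # [{"name": "ack", "options": {"reports": 3}}]
print(outcome.raised)                  # [('pothole', 'escalate', {'reports': 3})]
```

The first two reports only produce an acknowledgment; the third produces both JSON and a new event, which is the kind of state-dependent behavior that plain storage cannot offer.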

Event Channels

Picos communicate with each other via event channels. A pico can create as many event channels as necessary for its operation. Event channels are one-way, so if two picos need to communicate with each other, they must both create an event channel and supply it to the other in a process called "symmetric subscription."

Event channels have unique identifiers called "event channel identifiers" or ECIs. Event channels can be independently managed, permissioned, and responded to. Best practice is to create a new event channel for each connection rather than having multiple entities communicate over a single channel.
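Here is a minimal Python sketch of symmetric subscription: each pico creates a one-way inbound channel dedicated to the other pico and hands over that channel's ECI. The class and function names are hypothetical, and minting ECIs with `uuid.uuid4()` is an assumption.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Channel:
    eci: str
    owner: str  # KEN of the pico that receives events on this channel
    name: str


@dataclass
class Pico:
    ken: str
    channels: dict = field(default_factory=dict)  # eci -> inbound Channel this pico owns
    outbound: dict = field(default_factory=dict)  # peer name -> ECI used to reach the peer

    def create_channel(self, name):
        # Assumption: a random UUID stands in for an ECI.
        channel = Channel(eci=uuid.uuid4().hex, owner=self.ken, name=name)
        self.channels[channel.eci] = channel
        return channel


def symmetric_subscription(a, a_name, b, b_name):
    """Each pico creates a new one-way inbound channel and gives its ECI to the other."""
    into_a = a.create_channel(f"from-{b_name}")
    into_b = b.create_channel(f"from-{a_name}")
    a.outbound[b_name] = into_b.eci  # a sends to b on b's channel
    b.outbound[a_name] = into_a.eci  # b sends to a on a's channel
    return into_a, into_b


owner = Pico(ken="ken-owner")
truck = Pico(ken="ken-truck")
symmetric_subscription(owner, "owner", truck, "truck")
print(owner.outbound["truck"] in truck.channels)  # True
print(truck.outbound["owner"] in owner.channels)  # True
```

Because every relationship gets its own pair of channels, either side can later revoke or re-permission one relationship without disturbing the others, which is the point of the one-channel-per-connection practice.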

Event channels have attributes and use attribute-based authorization to control inbound communications.
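A minimal sketch of attribute-based authorization on an inbound channel, in Python. The policy shown, an `allowed_domains` attribute checked against the incoming event's domain with deny-by-default when the attribute is missing, is a hypothetical example of such a rule, not the policy KRE enforces.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    eci: str
    # Hypothetical attributes; the policy below reads only "allowed_domains".
    attributes: dict = field(default_factory=dict)


def authorize(channel, event_domain):
    """Admit an inbound event only if the channel's attributes allow its domain."""
    allowed = channel.attributes.get("allowed_domains")
    if allowed is None:
        return False  # assumption: deny by default when no policy attribute is present
    return event_domain in allowed


borrower = Channel("eci-neighbor-truck", {"relationship": "borrower",
                                          "allowed_domains": ["truck"]})
print(authorize(borrower, "truck"))     # True
print(authorize(borrower, "finances"))  # False
```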

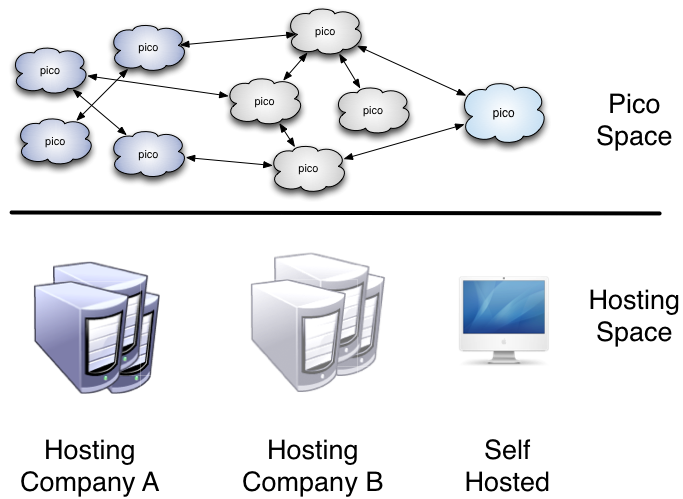

Picos and their event channels are constructed so that there need be no relationship between the pico containers (and their hosters) in order for two picos to share a channel as depicted in the following diagram.

A network of picos can be hosted in containers run by multiple independent entities. Some of these containers may be self-hosted.

These properties allow a collection of picos to create a peer-to-peer network regardless of where they are hosted. At present there is no global indexing scheme for picos, but multiple possibilities are being explored.

APIs

Picos have several different in-bound APIs:

- The Sky Event API is a method of encoding events in HTTP for transport.

- The Sky Mail API encodes events as SMTP messages.

- The Sky Cloud API provides HTTP GET access to functions running inside rulesets in a pico.

All of these APIs are more properly called "meta-APIs" or metaprotocols since they don't define a specific API but rather describe the way APIs will be constructed from the particular rulesets that are installed in a given pico. The API for a given pico depends on the rulesets installed in it.
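To show how a metaprotocol yields concrete endpoints, here is a Python sketch that builds Sky Event and Sky Cloud URLs from a channel's ECI plus either an event's domain and type or a ruleset's function name. The exact path layouts (`/sky/event/<eci>/<eid>/<domain>/<type>` and `/sky/cloud/<rid>/<function>?_eci=...`) and the host `kre.example.com` are assumptions for illustration; check the Sky API documentation for the container you use.

```python
from urllib.parse import quote, urlencode


def sky_event_url(host, eci, eid, domain, event_type, attrs=None):
    """Encode an event as an HTTP URL (assumed layout: /sky/event/eci/eid/domain/type)."""
    path = "/".join(quote(part, safe="") for part in
                    ("sky", "event", eci, eid, domain, event_type))
    query = f"?{urlencode(attrs)}" if attrs else ""
    return f"https://{host}/{path}{query}"


def sky_cloud_url(host, eci, rid, function, args=None):
    """Build a GET URL that calls `function` in ruleset `rid` (assumed layout)."""
    path = "/".join(quote(part, safe="") for part in ("sky", "cloud", rid, function))
    params = {"_eci": eci, **(args or {})}
    return f"https://{host}/{path}?{urlencode(params)}"


print(sky_event_url("kre.example.com", "ECI123", "42", "pothole", "reported",
                    {"street": "Main St"}))
# https://kre.example.com/sky/event/ECI123/42/pothole/reported?street=Main+St
print(sky_cloud_url("kre.example.com", "ECI123", "potholes", "count"))
# https://kre.example.com/sky/cloud/potholes/count?_eci=ECI123
```

The builders are identical for every pico; whether a given URL does anything depends entirely on which rulesets are installed to handle `pothole:reported` or to define a `count` function, which is why these are better described as meta-APIs.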

In addition to these in-bound APIs, rulesets running in a pico can use HTTP to access any other Web-based API, making picos powerful integration and application platforms for using the many Web-based APIs available online.

Implementation

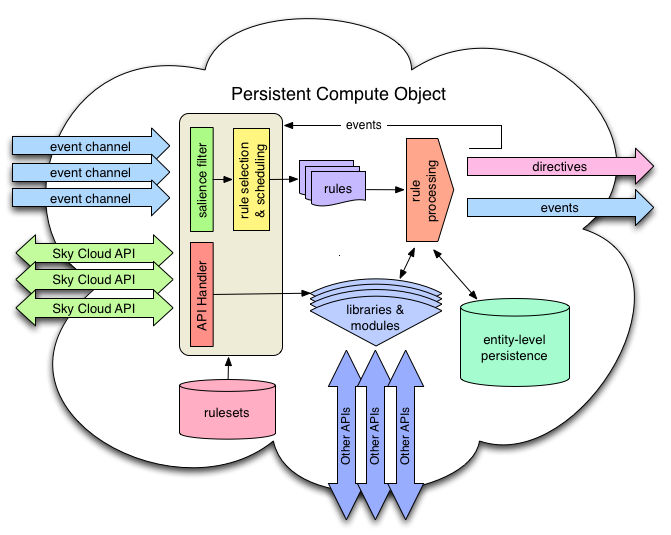

As noted above, KRE is a pico container and provides picos with the fundamental features described above. The following block diagram shows some of the internal components of a pico and their relationship to each other.

A few comments on the diagram:

- Rulesets give rise to the event and API handlers. The libraries and modules are also defined by rulesets. KRL rulesets are the common denominator in the processing that occurs in KRE-hosted picos.

- Rule processing can result in new events which are fed back into the event handler. This results in great power as a rule can cause further rule processing. This allows rules to be used for semantic interpretation of events, event routing, and other higher-level operations on event streams.
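The feedback loop in the last point can be sketched in Python as a queue: events raised by rules are appended to the same queue the event handler is draining. The handler-registry shape, the rule names, and the `max_steps` cycle guard are assumptions added for the sketch.

```python
from collections import deque


def run_event_loop(initial_event, rules, max_steps=100):
    """Process an event and every event raised as a consequence of it.

    `rules` maps (domain, type) to handler functions that return a list of
    new (domain, type, attrs) events. Raised events go back on the queue,
    so rules can route or reinterpret events for other rules.
    """
    queue = deque([initial_event])
    processed = []
    while queue:
        if len(processed) >= max_steps:
            raise RuntimeError("event cascade exceeded max_steps")  # guard against cycles
        domain, event_type, attrs = queue.popleft()
        processed.append((domain, event_type))
        for handler in rules.get((domain, event_type), []):
            queue.extend(handler(attrs))
    return processed


# Hypothetical rules: a low-level sensor reading is interpreted as a higher-level event.
def interpret_reading(attrs):
    return [("pothole", "reported", attrs)] if attrs["bump_depth_cm"] > 5 else []


def notify_crew(attrs):
    return [("crew", "dispatch", {"street": attrs["street"]})]


rules = {
    ("sensor", "reading"): [interpret_reading],
    ("pothole", "reported"): [notify_crew],
}
trace = run_event_loop(("sensor", "reading", {"bump_depth_cm": 8, "street": "Main St"}), rules)
print(trace)  # [('sensor', 'reading'), ('pothole', 'reported'), ('crew', 'dispatch')]
```

Here `interpret_reading` performs semantic interpretation (a raw reading becomes a pot hole report) and `notify_crew` performs routing, yet neither calls the other directly.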

Modeling with Picos

As I described in Pot Holes and Picos, picos can be used to model the physical world. The pico that represents a pot hole gets created when the pot hole is first noticed. It need never go away. Even after the pot hole has been fixed, its pico gets updated as "fixed" but the pico and its relationships to the street, the person who reported it, and the crew that fixed it remain, ready to be used. Want to use your Google Glass to see a time lapse of all the pot holes that have come and gone on your street? A road engineer might. In a mirror world built with picos, all the data is available.

Picos can also be used to model concepts, sets, groups, and other computational features. In Building a Blog with Personal Clouds, I describe the use of picos (called "personal clouds" and "PDOs" in the post) to create a blog that could be completely distributed. In such a system, each entry, archive, collection, and search result is modeled as a pico. Note that this is similar to how an object-oriented programmer might use objects to model such a system.

Picos, by their decentralized nature, provide for privacy by design or by structure instead of privacy by agreement. As more and more of our lives are modeled in online systems, this feature will be more and more important.

Networks of interoperating picos provide a natural way to model ownership and other complex relationships that exist in the physical world. For example, consider a world where I have a pico that represents me and another that represents my truck. My neighbor Tom also has a pico that represents him. If he wants to borrow my truck, I may also want to create a relationship between his pico and the truck's pico so that he has access to necessary information and maybe even the means to open and start it.

CloudOS

Managing large collections of picos, their interlocking channels, and rulesets can be a big job. We've created an operating system called CloudOS to make that task easier. CloudOS provides a number of services that simplify developing with picos, including support for the following:

- CloudOS Service—support for creating, managing, and destroying picos, channels, and rulesets.

- Subscription Service—support for creating subscriptions between picos.

- Personal Data—support for managing data within the pico including sharing data between applications. It also provides common, standard API access to pico data via the Sky Cloud API.

- Notifications—supports inter-pico and intra-pico notifications that can be used to communicate with humans via SMS and email.

CloudOS is designed to run on picos and is written entirely in KRL. While it is possible to use picos without CloudOS, there's no obvious reason to do so. CloudOS supplies important services that simplify developing systems that use picos. Picos running CloudOS are complete in the sense that they form a usable system with the right scaffolding for effectively constructing functioning applications.

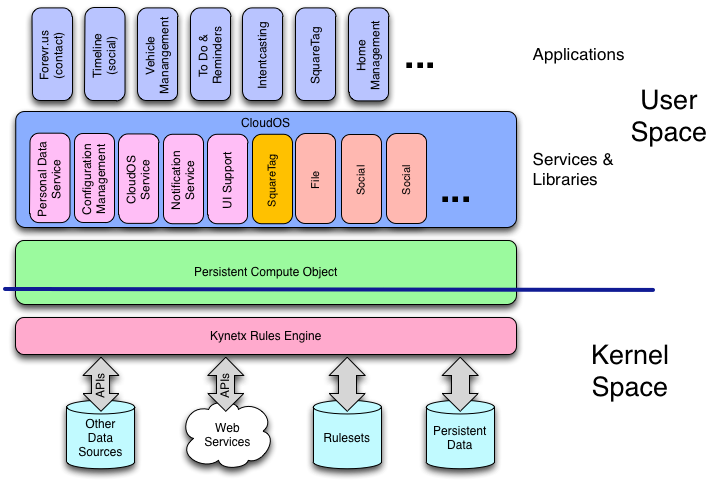

The following diagram shows this relationship with KRE containing the pico and CloudOS running on the pico with applications running on top of the complete stack. The ideas of user and kernel space are imperfectly represented in the diagram, but are real since there are privileged and unprivileged operations that are enforced by KRE on behalf of the pico.
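The user/kernel distinction can be sketched in Python as a gate that the container applies before carrying out an operation requested by a ruleset. Which operations count as privileged, and the idea of a set of privileged ruleset IDs that includes CloudOS, are assumptions made for illustration; the text only says that KRE enforces privileged and unprivileged operations.

```python
class PrivilegeError(PermissionError):
    pass


class KernelServices:
    """Hypothetical container-side gate between privileged and unprivileged rulesets."""

    PRIVILEGED_OPS = {"create_pico", "delete_pico", "install_ruleset"}

    def __init__(self, privileged_rulesets):
        self.privileged_rulesets = set(privileged_rulesets)
        self.log = []

    def call(self, caller_rid, op, *args):
        # Only rulesets registered as privileged may perform privileged operations.
        if op in self.PRIVILEGED_OPS and caller_rid not in self.privileged_rulesets:
            raise PrivilegeError(f"{caller_rid} may not call {op}")
        self.log.append((caller_rid, op, args))
        return True


kernel = KernelServices(privileged_rulesets={"cloudos"})
kernel.call("cloudos", "create_pico", "truck")   # allowed: caller is privileged
kernel.call("my_app", "raise_event", "pothole")  # allowed: not a privileged op
try:
    kernel.call("my_app", "delete_pico", "truck")
except PrivilegeError as err:
    print(err)  # my_app may not call delete_pico
```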

Because CloudOS is written in KRL, it is a more accessible means of extending and completing the pico programming environment than mucking about in the guts of KRE. This has important consequences: Components of CloudOS can be easily replaced with experimental or augmented libraries on specific instances of KRE without the source code for the container having to support them. This provides flexibility and stability as picos are deployed in a variety of containers at many different hosting sites.

Conclusion

Picos provide a powerful way for modeling both physical and conceptual ideas. Inside those models picos give rise to autonomous online systems that operate in real-time and leverage existing Web-based APIs. Picos are more than just "object-oriented programming in the cloud," as powerful as that idea might be, because they are architected to create interoperating systems of autonomous agents that function across accountability boundaries. We continue to explore their power and expand their functionality. We invite you to help.

Notes: I love that "pico" also means "one-trillionth" because I believe that the Internet of Things will be a trillion-node network. I also like that "pico" means "peak" (among other things) in Spanish.